Optimal Transport Theory: The Hidden Bridge in Machine Learning

Imagine two cities separated by a river. Each city has resources that need to be delivered to the other side—efficiently, fairly, and with minimal cost. The art of planning those routes, ensuring nothing is wasted and every resource reaches its ideal destination, lies at the heart of Optimal Transport Theory. In the world of Machine Learning (ML), this concept has evolved into a mathematical compass guiding how models learn, adapt, and generalise across domains.

Optimal transport is not about roads and vehicles—it’s about probability distributions, data points, and cost functions. It provides ML practitioners with a way to map one data landscape to another, reducing inefficiency and improving learning precision.

The Essence of Optimal Transport

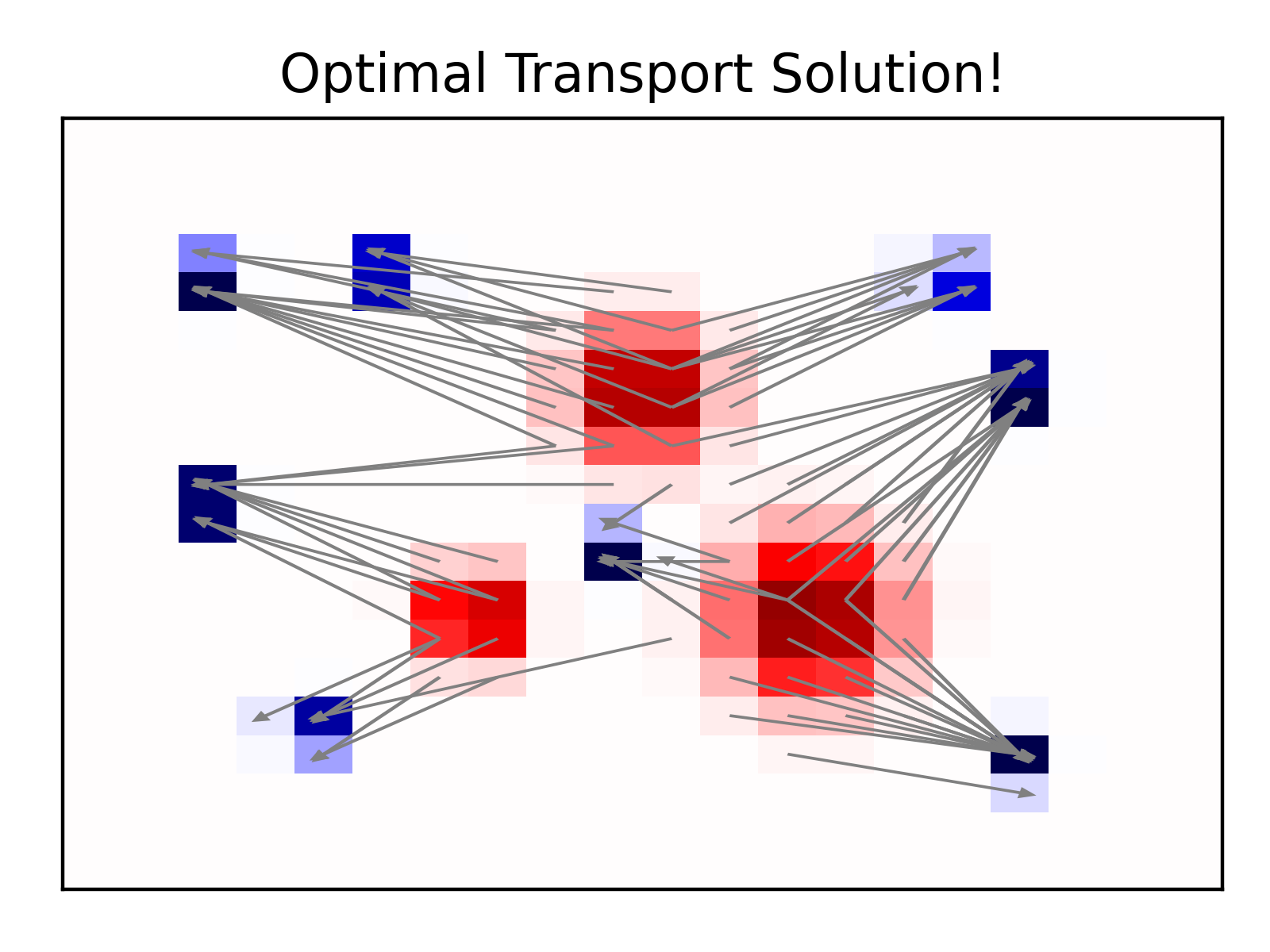

At its core, optimal transport deals with moving “mass” (which can represent probability or data density) from one distribution to another at the lowest possible cost. The cost of each move is the amount of mass moved multiplied by a ground cost, such as the distance travelled. In ML, this mass could represent pixel intensities in an image, clusters in a dataset, or the feature distributions of two different domains.
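The simplest case is two equally sized sets of 1-D points in which every point carries the same mass. On the real line, with a cost of |x - y|^p for p ≥ 1, pairing the points in sorted order is an optimal plan, so the p-Wasserstein distance can be computed without a general solver. The minimal sketch below relies on that fact. The function name `wasserstein_1d` is a hypothetical helper, not a library API.

```python
def wasserstein_1d(xs, ys, p=1):
    """p-Wasserstein distance between two equal-size, equally weighted 1-D samples.

    In 1-D, pairing the i-th smallest source point with the i-th smallest
    target point is an optimal transport plan for costs |x - y|**p, p >= 1.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("expected two non-empty samples of equal size")
    # Sort both samples; the monotone (sorted) pairing is an optimal plan.
    pairs = zip(sorted(xs), sorted(ys))
    # Average transport cost per unit of mass, then take the p-th root.
    mean_cost = sum(abs(x - y) ** p for x, y in pairs) / len(xs)
    return mean_cost ** (1.0 / p)


# Moving three equal "piles" at 0, 1, 2 onto 3, 4, 5 costs 3 per pile.
print(wasserstein_1d([0.0, 1.0, 2.0], [5.0, 3.0, 4.0]))  # 3.0
```

In higher dimensions there is no such sorting shortcut, and the plan is usually found with a linear-programming or approximate solver.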

For example, in generative modelling, a neural network tries to convert a simple distribution, such as random noise, into something meaningful, such as a human face or a landscape. Optimal transport can define the “cost” of this transformation, helping the model find an efficient mapping between the input and output distributions.
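In one dimension, the most efficient path from noise to data has a closed form. For convex costs, the optimal map is the monotone rearrangement T = F_target^(-1) ∘ F_source, where F denotes a cumulative distribution function. The sketch below assumes Uniform(0, 1) noise and an exponential target, both chosen purely for illustration. Because the uniform CDF is the identity on [0, 1], the map reduces to the exponential's inverse CDF. The helper `uniform_to_exponential` is hypothetical. A generative network has to learn comparable maps in high dimensions, where no closed form is available.

```python
import math
import random


def uniform_to_exponential(u, rate=1.0):
    """Monotone (optimal transport) map from Uniform(0, 1) to Exponential(rate).

    For a uniform source F_source(u) = u, so the map reduces to the
    target's inverse CDF: T(u) = -ln(1 - u) / rate.
    """
    if not 0.0 <= u < 1.0:
        raise ValueError("u must lie in [0, 1)")
    return -math.log1p(-u) / rate


# Push uniform "noise" through the map; the outputs follow Exponential(2).
random.seed(0)
samples = [uniform_to_exponential(random.random(), rate=2.0) for _ in range(100_000)]
print(sum(samples) / len(samples))  # close to the exponential mean 1 / rate = 0.5
```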

Students enrolling in an artificial intelligence course in Bangalore often explore this principle to understand how distance metrics such as the Earth Mover’s Distance (EMD), another name for the 1-Wasserstein distance, can improve training stability in advanced neural networks.
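For histograms defined on the same evenly spaced 1-D bins, the EMD has a convenient closed form: it equals the area between the two cumulative distributions. The sketch below assumes both histograms have positive total mass and normalises each to 1. The names `emd_1d_histograms` and `bin_width` are illustrative.

```python
from itertools import accumulate


def emd_1d_histograms(a, b, bin_width=1.0):
    """Earth Mover's Distance between two histograms on the same 1-D grid.

    With equally spaced bins, the EMD (1-Wasserstein distance) equals the
    area between the two cumulative distributions.
    """
    if len(a) != len(b):
        raise ValueError("histograms must share the same bins")
    total_a, total_b = sum(a), sum(b)
    # Normalise so both histograms carry the same total mass (1.0).
    cdf_a = accumulate(x / total_a for x in a)
    cdf_b = accumulate(y / total_b for y in b)
    return bin_width * sum(abs(fa - fb) for fa, fb in zip(cdf_a, cdf_b))


# All mass in the first bin vs. all mass in the third bin: it travels 2 bins.
print(emd_1d_histograms([1, 0, 0], [0, 0, 1]))  # 2.0
# Moving only half of the mass by one bin costs half as much.
print(emd_1d_histograms([1, 0, 0], [0.5, 0.5, 0]))  # 0.5
```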

Applications in Robust Loss Functions

Training ML models is much like teaching a child to balance on a tightrope. Too many corrections can lead to overfitting; too few can lead to underfitting. Traditional loss functions, such as Mean Squared Error (MSE), can be dominated by outliers, and they compare predictions point by point rather than comparing whole distributions.

This is where optimal transport introduces the Wasserstein loss, a metric that measures the minimum effort required to turn one probability distribution into another, weighting each unit of mass by how far it must move. Because it changes smoothly as distributions drift apart, even when they do not overlap, it tends to provide more informative gradients than divergences such as Kullback-Leibler or Jensen-Shannon. This can help models learn complex relationships in noisy datasets, which makes it valuable in many deep learning applications.
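Between general discrete distributions, computing the Wasserstein loss exactly means solving a linear program. A widely used approximation, which the article itself does not name, adds an entropy term and solves the result with Sinkhorn iterations, alternately rescaling the rows and columns of a kernel matrix. The sketch below assumes NumPy, uniform weights, and a squared Euclidean ground cost, so the returned value approximates the squared 2-Wasserstein distance. The names `sinkhorn`, `eps`, and `n_iters` are illustrative choices, not a specific library's API.

```python
import numpy as np


def sinkhorn(a, b, cost, eps=0.1, n_iters=500):
    """Entropy-regularised optimal transport between two discrete distributions.

    a: (n,) source weights and b: (m,) target weights, each summing to 1.
    cost: (n, m) ground-cost matrix C[i, j].
    Returns the (approximate) transport plan P and the transport cost sum(P * C).
    """
    K = np.exp(-cost / eps)  # Gibbs kernel; smaller eps is closer to exact OT
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iters):
        # Alternately rescale rows and columns so P = diag(u) K diag(v)
        # moves toward having row sums a and column sums b.
        u = a / (K @ v)
        v = b / (K.T @ u)
    plan = u[:, None] * K * v[None, :]
    return plan, float(np.sum(plan * cost))


# Two small 1-D point clouds; the target is the source shifted by 0.1.
x = np.array([0.0, 1.0, 2.0])
y = np.array([0.1, 1.1, 2.1])
a = np.full(3, 1 / 3)  # uniform weights on the source points
b = np.full(3, 1 / 3)  # uniform weights on the target points
C = (x[:, None] - y[None, :]) ** 2  # squared Euclidean ground cost
P, loss = sinkhorn(a, b, C)
print(np.round(P, 3))
print(loss)  # close to the exact optimum of 0.01 (each point moves 0.1)
```

After a finite number of iterations the column sums match `b` exactly, while the row sums only approximately match `a`. Smaller values of `eps` bring the cost closer to the exact optimum but slow convergence and risk underflow in the kernel, which is why production code often works in the log domain. Libraries such as POT (Python Optimal Transport) provide tested implementations.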

Through hands-on training, learners in an artificial intelligence course in Bangalore encounter these techniques when experimenting with GANs (Generative Adversarial Networks), where the choice of loss function strongly affects training stability and the realism of the generated images.

Generative Modelling: Mapping Creativity

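Generative models are among the most imaginative applications of optimal transport. Think of them as artists who learn not from words but from patterns in data. Optimal transport ideas appear across the main model families. The Wasserstein GAN builds on them directly, Wasserstein autoencoders offer an OT-based alternative to VAEs (Variational Autoencoders), and some diffusion and flow-based models use optimal transport couplings to align generated data with real-world samples.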

The Wasserstein GAN (WGAN), for instance, trains a critic network to estimate the Wasserstein-1 distance between the generated data distribution and the real one. This approach reduces instability issues seen in earlier GANs, such as vanishing gradients and mode collapse, and yields a loss that tends to fall as sample quality improves. By quantifying how “far apart” two datasets are, optimal transport transforms subjective creativity into measurable learning.
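A toy example makes the WGAN argument concrete. Suppose the real data is a point mass at 0 and a one-parameter generator places all of its mass at θ. Whenever θ ≠ 0 the two distributions do not overlap, so the Jensen-Shannon divergence (to which the original GAN objective is related) stays at log 2 regardless of θ and gives no gradient. The Wasserstein-1 distance, by contrast, equals |θ| and shrinks as the generator moves toward the data. The helpers below are hypothetical and handle only finite distributions on the real line.

```python
import math


def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between discrete distributions.

    p, q: dicts mapping support points to probabilities.
    """
    js = 0.0
    for x in set(p) | set(q):
        px, qx = p.get(x, 0.0), q.get(x, 0.0)
        mx = 0.5 * (px + qx)  # mixture distribution M = (P + Q) / 2
        if px > 0:
            js += 0.5 * px * math.log(px / mx)
        if qx > 0:
            js += 0.5 * qx * math.log(qx / mx)
    return js


def w1_distance(p, q):
    """Wasserstein-1 distance between discrete distributions on the real line."""
    points = sorted(set(p) | set(q))
    cdf_p = cdf_q = total = 0.0
    for left, right in zip(points, points[1:]):
        cdf_p += p.get(left, 0.0)
        cdf_q += q.get(left, 0.0)
        # Area between the two CDFs on the interval [left, right).
        total += abs(cdf_p - cdf_q) * (right - left)
    return total


real = {0.0: 1.0}  # all "real" mass sits at 0
for theta in [0.5, 1.0, 2.0]:
    fake = {theta: 1.0}  # the generator puts all its mass at theta
    # JS stays at log 2 for every theta; W1 equals theta.
    print(theta, round(js_divergence(real, fake), 4), w1_distance(real, fake))
```

A real WGAN cannot compute W1 directly in high dimensions; its critic estimates it through a dual formulation that requires the critic to be 1-Lipschitz.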

Domain Adaptation: Teaching Models to Generalise

In real-world applications, models often face data that differs from what they were trained on. For example, an image classifier trained on clear daytime photos might fail on foggy nighttime images. This discrepancy between training and deployment environments is known as domain shift.

Optimal transport can help bridge this gap by finding a low-cost mapping from the source domain's samples to the target domain's samples. Aligning the two feature distributions in this way helps the model keep making accurate predictions even when the input changes. This makes it a valuable tool for cross-domain learning, whether adapting medical imaging models across hospitals or adapting language models across dialects.

By choosing how costly it is to move mass between particular regions of the two domains, practitioners can shape which alignments optimal transport favours, helping models stay adaptable and robust when the environment shifts unexpectedly.
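One way to sketch OT-based domain adaptation is to compute an optimal transport plan between labelled source samples and unlabelled target samples, then carry the source labels along that plan. The toy below assumes equal-size, uniformly weighted 2-D samples and a squared Euclidean cost. The coordinates, labels, and the brute-force solver (which scales as n!) are illustrative only. A practical system would use a linear-programming or Sinkhorn solver on learned features.

```python
from itertools import permutations


def squared_distance(p, q):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def exact_ot_matching(source, target):
    """Exact OT between two equal-size, uniformly weighted point sets.

    With uniform weights an optimal plan can be taken to be a permutation,
    so for tiny sets every permutation can be tried (n! candidates).
    Returns a tuple whose i-th entry is the target index for source point i.
    """
    if len(source) != len(target):
        raise ValueError("expected point sets of equal size")
    best_perm, best_cost = None, float("inf")
    for perm in permutations(range(len(target))):
        cost = sum(squared_distance(source[i], target[j]) for i, j in enumerate(perm))
        if cost < best_cost:
            best_perm, best_cost = perm, cost
    return best_perm


# Hypothetical labelled source domain (e.g. features of daytime photos)...
source = [(0.0, 0.0), (0.5, 0.2), (4.0, 0.0), (4.5, 0.3)]
labels = [0, 0, 1, 1]
# ...and an unlabelled target domain: the same structure shifted by (1, 3), shuffled.
target = [(5.5, 3.3), (1.0, 3.0), (5.0, 3.0), (1.5, 3.2)]

match = exact_ot_matching(source, target)
# Carry each source label along the transport plan to its matched target point.
target_labels = [None] * len(target)
for i, j in enumerate(match):
    target_labels[j] = labels[i]
print(target_labels)  # [1, 0, 1, 0]
```

For a squared Euclidean cost, a pure translation is itself an optimal map, so the matching recovers the true correspondence here. Real domain shifts are rarely this clean, which is why the choice of cost and features matters.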

Beyond Mathematics: Why It Matters

Optimal transport isn’t just another abstract theory: it underpins practical tools for fairness, precision, and adaptability in AI. It allows algorithms to find harmony between diverse datasets, for example by aligning score distributions across demographic groups or embeddings learned from different sources, and to move between structured and unstructured information.

For professionals looking to master this balance, understanding how optimal transport interacts with neural networks and data representations is becoming an essential skill. It demonstrates how mathematics can bring elegance to machine learning design—where every computation has purpose, and every transformation follows logic.

Conclusion

Optimal Transport Theory represents the elegant choreography of movement in the digital world. It describes how data distributions can be aligned, how models can adapt to their environments, and how AI systems can pursue both efficiency and fairness. Whether applied to robust loss functions, generative models, or domain adaptation, it provides a principled foundation for many modern, reliable ML methods.

As AI continues to evolve, the principles of optimal transport remind us that behind every technological advancement lies a structured path of optimisation—an art of moving from chaos to clarity, from randomness to reasoning.